Student Assessment Improved by Building up a Question Bank in Canvas

Through frequent low-stakes testing, students engage in retrieval practice that improves their retention of information and scaffolds their learning of complex course concepts. However, creating, grading, and maintaining multiple quizzes for a course takes real effort on the instructor’s part. Digital tools such as Canvas can both better support student learning and make effective use of instructors’ most valuable resource: time.

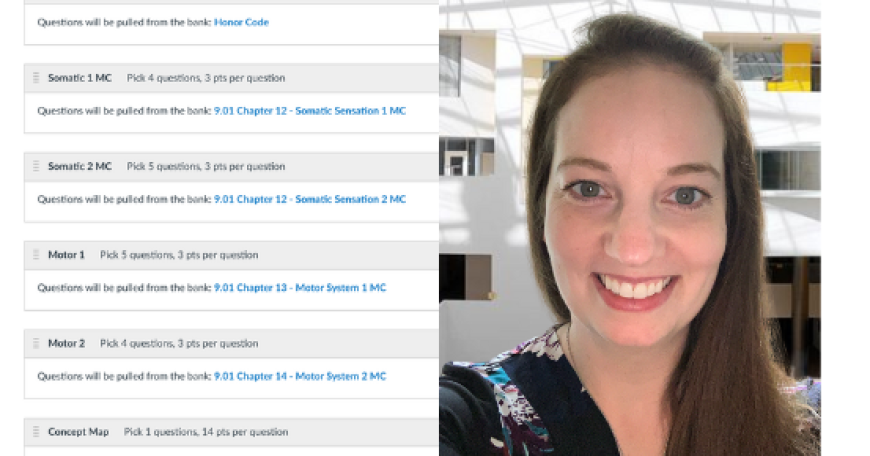

Senior Lecturer Laura Frawley, who teaches 9.01 (Introduction to Neuroscience) with Mark Bear and 9.00 (Introduction to Psychological Science) with John Gabrieli, used Canvas Question Banks to transition from relying heavily on high-stakes assessments to having more frequent quizzes distributed throughout the semester. The results include student performance data (from quiz statistics) and a library of questions that can be reused or updated for future iterations of 9.01 and 9.00.

The quizzes

In 9.01, eight quizzes are distributed throughout the term, worth ~6.9% each for a total of 55% of the cumulative grade. The quizzes are untimed, open-book, with a 2–3-day window for completion, and consist of 18 questions (including multiple choice, multiple answer, matching, and fill-in-the-blank) and one concept map. Similarly, 9.00 has six quizzes worth a total of 40% of the course grade, with 18 multiple-choice-like questions and one concept map as well. Because the quizzes are on Canvas, students complete them asynchronously, and instructors can issue each student a unique, randomized quiz.

A quiz is typically released on a Friday with a Monday deadline. Laura Frawley then reviews the Canvas quiz statistics, and a day or so later she and the course TAs review the questions and results together (see paragraphs below). This allows the course team to release grades to students no more than three days after a quiz deadline. Students can see the correct answer for each question, and they are encouraged to seek clarifications during mandatory weekly recitations.

General timing of the quiz release through grading/feedback

The question bank & data

To set up the quizzes, a Question Bank was created in a Canvas non-class site available only to the teaching team. For a given quiz, new questions are written and added to the existing question bank groups for each lecture and corresponding chapter, and questions are also pulled from the existing question banks. The number of questions to include and the points per question are then entered. Using a non-class site is not necessary, though Laura Frawley prefers this method because it keeps the question banks independent from the course Canvas site(s). The non-class site serves as a master list of all question banks created over time, maintained in one place, from which current or future Canvas course sites can pull quiz questions. Over the past three years, the question banks have expanded with new quiz questions that can be updated and/or remixed each term.
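As a rough illustration of the idea of drawing each student's quiz from question bank groups, here is a minimal Python sketch. It is not how Canvas implements this internally. The group names, question IDs, point values, and the `draw_quiz` helper are all hypothetical and chosen only for illustration.

```python
import random

# Hypothetical question bank: group name -> question IDs (illustrative only).
QUESTION_BANK = {
    "lecture_01": ["L1-Q1", "L1-Q2", "L1-Q3", "L1-Q4"],
    "lecture_02": ["L2-Q1", "L2-Q2", "L2-Q3"],
    "lecture_03": ["L3-Q1", "L3-Q2", "L3-Q3", "L3-Q4", "L3-Q5"],
}

# Hypothetical quiz settings: questions to draw per group and points per question.
QUIZ_SPEC = {
    "lecture_01": {"pick": 2, "points": 1.0},
    "lecture_02": {"pick": 1, "points": 2.0},
    "lecture_03": {"pick": 3, "points": 1.0},
}


def draw_quiz(bank, spec, seed):
    """Return a list of (question_id, points) drawn at random from each group."""
    rng = random.Random(seed)  # one seed per student makes the draw reproducible
    quiz = []
    for group, rule in spec.items():
        picked = rng.sample(bank[group], rule["pick"])  # no repeats within a group
        quiz.extend((question_id, rule["points"]) for question_id in picked)
    return quiz


def total_points(quiz):
    """Sum the points of every question on a drawn quiz."""
    return sum(points for _, points in quiz)
```

Because every group contributes a fixed number of questions at a fixed point value, each student's quiz has the same length and the same total points even though the specific questions differ.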

Quiz statistics on Canvas give Laura Frawley the opportunity to review students’ quiz outcomes and also indicate which questions need further refinement. The statistics break down each individual question, showing what percentage of students earned full credit on it and what percentage selected each answer choice. Flagging questions where fewer than 70% of students answer correctly or where more than 25% select the same incorrect choice, for example, gives a collective sense of where students may need clarification or where questions were ambiguous (see examples below).

Example of a question from 9.01 for the teaching team’s review—although 70% of students answered correctly, 27% selected an incorrect yet reasonable answer choice.

Example of a question from 9.00 for the teaching team’s review—the third answer choice was ambiguous. It could be interpreted as (1) different patients show changes in different brain regions and (2) brain regions responding to behavior therapy and medication are different. Because of the ambiguity, points were given back to students for this answer option, and the question can be improved for future classes.
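The flagging rule described above (fewer than 70% correct, or more than 25% choosing one incorrect option) can be sketched in a few lines of Python. This is an illustrative sketch only, and it assumes the per-choice percentages have already been read off the Canvas quiz statistics by hand. The function name, its parameters, and the sample data are hypothetical.

```python
def flag_question(choice_pct, correct_choice, min_correct=70.0, max_wrong=25.0):
    """Return the reasons (possibly none) a question deserves a second look.

    choice_pct maps each answer choice to the percent of students who chose it.
    The default thresholds mirror the example values mentioned in the text.
    """
    reasons = []
    if choice_pct[correct_choice] < min_correct:
        reasons.append("low correct rate")
    for choice, pct in choice_pct.items():
        if choice != correct_choice and pct > max_wrong:
            reasons.append(f"popular distractor: {choice}")
    return reasons


# Hypothetical data shaped like the 9.01 example: 70% correct, 27% on one
# incorrect choice. The remaining 3% on choice "C" is an assumption.
example = {"A": 70.0, "B": 27.0, "C": 3.0}
print(flag_question(example, correct_choice="A"))
```

With these numbers the question passes the 70% threshold but is still flagged because one incorrect choice drew more than 25% of responses, which matches the kind of case the teaching team reviews.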

The Canvas discrimination index for each quiz question additionally helps mark whether a question is differentiating well between students who score higher on the quiz vs those who score lower overall. After each quiz, Laura Frawley and the teaching assistants use the quiz statistics as guides when they review quiz outcomes. They use Google Docs to share their feedback on whether a particular question is fair or ambiguous and whether they recommend giving points back to students. In addition to updating scores and clarifying concepts, the course team’s feedback on each quiz question helps to improve the question bank for the future.
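To make the idea of a discrimination index concrete, the sketch below computes a textbook upper-lower index: the share of top-scoring students who answered a question correctly minus the share of bottom-scoring students who did. This is an assumption made for illustration. Canvas's own calculation may differ and is not reproduced here, and the function name and the 27% group size are hypothetical choices.

```python
def discrimination_index(records, fraction=0.27):
    """Upper-lower discrimination index for one question.

    records: list of (total_quiz_score, answered_correctly) pairs, one per student.
    Returns p_upper - p_lower, a value between -1 and 1.
    """
    if not records:
        raise ValueError("need at least one student record")
    # Rank students from highest to lowest overall quiz score.
    ranked = sorted(records, key=lambda r: r[0], reverse=True)
    k = max(1, round(len(ranked) * fraction))  # size of each comparison group
    upper, lower = ranked[:k], ranked[-k:]
    p_upper = sum(1 for _, ok in upper if ok) / k
    p_lower = sum(1 for _, ok in lower if ok) / k
    return p_upper - p_lower
```

A value near 1 means the question was answered correctly mostly by students who scored well overall, a value near 0 means it did not separate the two groups, and a negative value suggests the question may be misleading or miskeyed.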

Key takeaways

Technology enables effective and efficient quizzing & grading.

Digital tools, such as Canvas Quizzes and Gradescope, can be used to issue, grade, and review tests. Using such tools saves the time otherwise spent grading assessments manually while also compiling performance statistics, which is especially helpful in large courses like 9.01 with 100+ students and 9.00 with 300+ students. While creating a bank of questions takes some initial effort, the ease of reusing, updating, or remixing the questions in the future pays that effort back. Laura Frawley also identified two useful ways to act on Canvas quiz data: addressing student misconceptions at the next lecture or recitation, and improving the questions each year.

Re-thinking assessments as a tool for learning can better support teachers and students.

The move away from high-stakes exams in 9.01 and 9.00 means that students are no longer in a “make-or-break” scenario and instead have distributed quizzes and other assignments (i.e., a written assessment) to assess their progress. Students revisit lecture notes, their books, or other course material, and participate in mandatory recitation sections, all of which provide different means of encoding and rehearsing information. With quizzes distributed (and required) throughout the term, retrieval of information becomes a regular practice. The intentional design reinforces learning while also providing instructors with data on where additional clarification might be needed and how questions can be improved.

Want to use Canvas or integrated tools for quizzes but need help? Email ol-residential@mit.edu for a consultation.

Related resources:

- Video on Canvas quizzes and Question Banks

- Instructions on creating a Question Bank and linking a quiz to a Question Bank

More from Laura Frawley:

- Video on re-thinking assessment

- Article about concept maps